Exploring Term-Document Matrix

This is part of a project on extracting word representations using statistical language modeling techniques. This part includes rudimentary corpus preprocessing, tokenization, vectorization, and inferences within the vector space model. The corpus is a public domain dataset of a million news headlines from the Australian Broadcasting Corporation between 2003 and 2021.

All code blocks for this part of the project are included in this document. The first block includes the imports used in this part of the project.

https://github.com/Using-Namespace-System/FeatureSpace.git

The Whole project can be cloned from the link above into a dev container and the configs will include the necessary dependencies.

from itertools import zip_longest

from matplotlib.pyplot import figure

import nltk

from nltk.corpus import stopwords

import numpy as np

import pandas as pd

from scipy.sparse import csr_array

from scipy.sparse import find

from pickleshare import PickleShareDB

df = pd.read_csv('../input/abcnews-date-text.csv')

nltk.download('stopwords')

stopwords_set = set(stopwords.words('english'))

Preprocessing the corpus is simplified to filtering out short headlines, small words, and stop-words. Each action is completed in pandas, I believe this may improve readability. The documents are exploded into a single series representing the whole corpus. From here stop-words can be filtered out. No further sanitation is performed.

#tokenize and sanitize

#tokenize documents into individual words

df['tokenized'] = df.headline_text.str.split(' ')

#remove short documents from corpus

df['length'] = df.tokenized.map(len)

df = df.loc[df.length > 1]

#use random subset of corpus

df=df.sample(frac=0.50).reset_index(drop=True)

#flatten all words into single series

ex = df.explode('tokenized')

#remove shorter words

ex = ex.loc[ex.tokenized.str.len() > 2]

#remove stop-words

ex = ex.loc[~ex.tokenized.isin(stopwords_set)]

#create dictionary of words

#shuffle for sparse matrix visual

dictionary = ex.tokenized.drop_duplicates().sample(frac=1)

#dataframe with (index/code):word

dictionary = pd.Series(dictionary.tolist(), name='words').to_frame()

#store code:word dictionary for reverse encoding

dictionary_lookup = dictionary.to_dict()['words']

#offset index to prevent clash with zero fill

dictionary['encode'] = dictionary.index + 1

#store word:code dictionary for encoding

dictionary = dictionary.set_index('words').to_dict()['encode']

#use dictionary to encode each word to integer representation

encode = ex.tokenized.map(dictionary.get).to_frame()

encode.index.astype('int')

encode.tokenized.astype('int')

#un-flatten encoded words back into original documents

docs = encode.tokenized.groupby(level=0).agg(tuple)

#match up document indexes for reverse lookup

df = df.sort_index().iloc[docs.index].reset_index(drop=True)

docs = docs.reset_index()['tokenized']

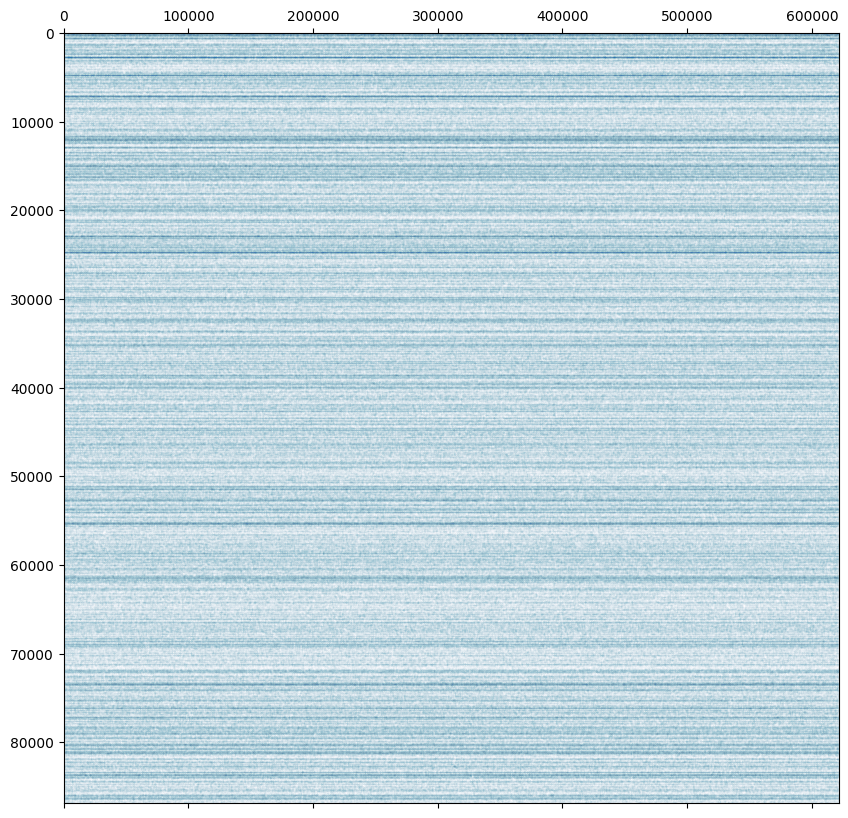

In this instance the word vector is a count vector. This is similar to one-hot but is able to convey how many times the term occurred in the document.

For the news headline dataset, document-wise term repetition is minimal and the statistical weight it provides is negligible.

#zero pad x dimension by longest sentence

encoded_docs = list(zip(*zip_longest(*docs.to_list(), fillvalue=0)))

#convert to sparse matrix

encoded_docs = csr_array(encoded_docs, dtype=int)

#convert to index for each word

row_column_code = find(encoded_docs)

#presort by words

word_sorted_index = row_column_code[2].argsort()

doc_word = np.array([row_column_code[0][word_sorted_index], row_column_code[2][word_sorted_index]])

#presort by docs and words

doc_word_sorted_index = doc_word[0].argsort()

doc_word = pd.DataFrame(np.array([doc_word[0][doc_word_sorted_index], doc_word[1][doc_word_sorted_index]]).T, columns=['doc','word'])

#offset code no longer needed after zero-fill

doc_word.word = doc_word.word - 1

#convert to index of word counts per document

doc_word_count = doc_word.groupby(['doc','word']).size().to_frame('count').reset_index().to_numpy().T

#convert to sparse matrix

sparse_word_doc_matrix = csr_array((doc_word_count[2],(doc_word_count[0],doc_word_count[1])), shape=(np.size(encoded_docs, 0),len(dictionary)), dtype=float).T

#visualize sparse matrix

fig = figure(figsize=(10,10))

sparse_word_doc_matrix_visualization = fig.add_subplot(1,1,1)

sparse_word_doc_matrix_visualization.spy(sparse_word_doc_matrix, markersize=0.007, aspect = 'auto')

%store sparse_word_doc_matrix

%store dictionary

%store dictionary_lookup

Out[ ]:

The words that occur together often in the corpus also, as word vectors, are closer together in this 600000 dimensional vector space. This is demonstrated in the table below.

In [ ]:

#approximating cosine similarity with dot product of the term document matrix and its transform

similarity_matrix = sparse_word_doc_matrix @ sparse_word_doc_matrix.T

#displaying slice of matrix with highest similarity scores

similarity_matrix_compressed = similarity_matrix[(-similarity_matrix.sum(axis = 1)).argsort()[:6]].toarray()

pd.DataFrame((-similarity_matrix_compressed).argsort(axis = 1)[:6,:6].T).applymap(dictionary_lookup.get)

| 0 | 1 | 2 | 3 | 4 | 5 | |

|---|---|---|---|---|---|---|

| 0 | police | new | man | says | court | nsw |

| 1 | man | zealand | charged | govt | man | rural |

| 2 | investigate | laws | police | minister | accused | police |

| 3 | probe | police | court | australia | face | govt |

| 4 | missing | cases | murder | new | told | country |

| 5 | search | york | jailed | trump | faces | coast |

Similarly, the documents with the most similarities are closer together in the vector space.

#previewing document similarity

doc_similarity_matrix = sparse_word_doc_matrix.T @ sparse_word_doc_matrix

#displaying slice of matrix with highest similarity scores

doc_similarity_matrix_compressed = doc_similarity_matrix[(-doc_similarity_matrix.sum(axis = 1)).argsort()[:6]].toarray()

pd.DataFrame((-doc_similarity_matrix_compressed).argsort(axis = 1)[:6,:6].T).applymap(df.headline_text.to_dict().get)

| 0 | 1 | 2 | 3 | 4 | 5 | |

|---|---|---|---|---|---|---|

| 0 | man bites police officer on new years day in a... | police search for man over police van crash | police search for man 'involved in police chas... | police union backs new police leadership team | new south wales government setting up new poli... | police investigate report of man impersonating... |

| 1 | new half day public holidays on christmas eve ... | police search for man 'involved in police chas... | police search for man over police van crash | police union demands answers over appointment ... | new south wales records 1485 new cases of covi... | police charge man for impersonating officer |

| 2 | istanbul police arrest new years day gunman and | police search for police assault suspect | police search for man over fatal sydney stabbing | police union slams police commissioner for cho... | new south wales covid coronavirus five new loc... | queensland police describe man police believe |

| 3 | police make new years day drink driving arrests | police investigate report of man impersonating... | police investigate report of man impersonating... | nt police urge examination of new police numbers | new president for new south wales farmers | man charged with impersonating police officer |

| 4 | new police information about tasmanian man mis... | queensland police describe man police believe | police to search sydney creek for missing man | wa police union president harry arnott stood a... | a new dairy body in new south wales has received | police search for man 'involved in police chas... |

| 5 | brother of man fatally stabbed on new years da... | police investigation after man struck by polic... | queensland police describe man police believe | wa police commissioner backs down on new polic... | new south wales retains top ranking in new two... | man charge with impersonating police officer a... |